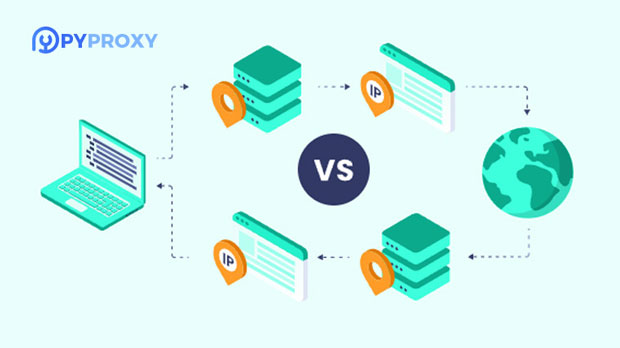

In the current era of digital privacy concerns and rapidly advancing internet technologies, protecting personal data while browsing has become a priority for many internet users. Among the tools designed to safeguard online privacy, two names that often come up are Pyproxy and DuckDuckGo Proxy. Both are used by people who want to shield their identity from prying eyes, and each takes a different approach to privacy and data protection.

Several factors determine how reliably each proxy connects in a wireless network environment. This article compares Pyproxy and DuckDuckGo Proxy on features, security, performance, and connection success rate over wireless networks, so that users can make an informed decision about which service better suits their privacy needs and browsing habits.

## Introduction to Pyproxy and DuckDuckGo Proxy

Both Pyproxy and DuckDuckGo Proxy are privacy-oriented services designed to anonymize users' internet activity. However, the way each tool operates and the users it attracts differ significantly. Pyproxy is primarily a Python-based proxy server that can be configured to route internet traffic securely, whereas DuckDuckGo Proxy is a product from the well-known search engine company DuckDuckGo, which focuses on minimizing data tracking during searches and web browsing.

In a wireless network environment, connection success is crucial, especially as wireless connections are often less stable than wired ones. Factors like latency, interference, and bandwidth limitations can affect how well these proxies perform.
Understanding how Pyproxy and DuckDuckGo Proxy handle these challenges is essential for users who rely on consistent, secure internet access.

## Pyproxy in Wireless Network Environments

Pyproxy is a popular choice for users who prefer open-source solutions that they can customize to their specific needs. As a Python-based proxy, it is highly configurable, allowing users to adjust settings for various network configurations. When it comes to wireless networks, the success rate of Pyproxy’s connection is influenced by several factors, including the network's bandwidth, signal strength, and any interference present.

1. Customization and Flexibility: One of the main benefits of using Pyproxy is its flexibility. Users can configure the proxy server to use different types of encryption, authentication methods, and routing protocols. This level of customization can help optimize the connection success rate in varying wireless network environments, especially when dealing with issues like fluctuating signal strength or interference.

2. Performance and Stability: Despite its customization capabilities, Pyproxy may experience performance issues in low-bandwidth or high-interference wireless environments. The proxy relies on Python, which is not the most lightweight programming language for handling high-volume traffic. This can result in slower connection speeds and intermittent disconnections, particularly on weaker or congested wireless networks.

3. Security Features: Pyproxy provides users with strong security options, including SSL/TLS encryption and advanced tunneling techniques. These features are particularly useful for protecting data from eavesdropping on unsecured wireless networks. However, the level of encryption and the types of protocols chosen can impact connection success.
For example, stronger encryption methods can increase the time it takes to establish a connection; on an unstable wireless link, a slower handshake is more likely to run into a timeout, which can reduce the overall connection success rate.

## DuckDuckGo Proxy in Wireless Network Environments

DuckDuckGo Proxy, on the other hand, is more focused on privacy through a simple, user-friendly interface. The company’s proxy service is often used in conjunction with its search engine to block tracking scripts and prevent personal data from being logged. It is designed to be easy to use, with little to no configuration required, making it ideal for users who want quick, hassle-free protection.

1. User-Friendly Setup: Unlike Pyproxy, DuckDuckGo Proxy does not require any configuration or setup from the user. It automatically routes internet traffic through its secure servers, so that data sent and received is protected. In wireless network environments, this simplicity can lead to a higher success rate for users who may not be familiar with advanced network configurations.

2. Connection Stability: DuckDuckGo Proxy tends to perform better in wireless environments that have high levels of interference or unstable connections. The service uses a range of reliable servers that are optimized to handle varying network conditions, which helps ensure a more consistent connection. The proxy’s architecture is designed to automatically switch to a more stable server if one becomes overloaded, further improving the connection success rate.

3. Privacy and Security: DuckDuckGo Proxy ensures that no personal data or search history is logged, which makes it an attractive option for users concerned about privacy. The service uses HTTPS to encrypt communication, which is especially important in wireless environments where data might otherwise be susceptible to interception.
However, the level of security provided by DuckDuckGo Proxy may not be as robust as Pyproxy’s, especially in cases where highly sensitive information needs to be protected.

## Factors Affecting Connection Success Rate

Several factors can influence the connection success rate of both Pyproxy and DuckDuckGo Proxy in wireless environments. These include network conditions, encryption methods, server location, and the specific requirements of the user. Below are some key elements that can affect the success of each proxy:

1. Network Bandwidth and Latency: Wireless networks often have lower bandwidth and higher latency than wired networks, which can significantly impact the performance of both proxies. For Pyproxy, the more customizable nature of the service means that users can adjust their settings to better suit the bandwidth and latency of their wireless network. In contrast, DuckDuckGo Proxy, while simpler to use, may experience slower speeds if the network connection is particularly poor.

2. Signal Strength and Interference: The quality of a wireless connection can vary depending on factors like signal strength and interference from other devices. Pyproxy’s more complex setup might offer more room for tweaking these variables, but it also means that users may need to spend time fine-tuning the configuration. DuckDuckGo Proxy, by contrast, is more suited for users looking for a reliable and quick solution, with less emphasis on customization.

3. Server Load and Location: Both proxies rely on external servers to route traffic. The success rate of the connection can be influenced by server load and geographic location. Pyproxy allows users to select their preferred servers, potentially improving the connection success rate if the user can choose a server closer to their location.
DuckDuckGo Proxy, while efficient, may not offer this level of control, and users might experience lower speeds or occasional drops in service if the nearest server is overloaded.

## Which Proxy Is Better for Wireless Networks?

Choosing between Pyproxy and DuckDuckGo Proxy ultimately depends on the specific needs of the user. For those who prioritize complete control over their proxy setup and are willing to invest time in optimizing their connection, Pyproxy may be the better option. It offers extensive customization options that allow users to tweak settings for better performance in wireless environments. However, it requires more technical know-how and may be less stable in networks with high interference.

On the other hand, DuckDuckGo Proxy is ideal for users who prioritize ease of use and don’t want to spend time configuring their proxy. It performs well in typical wireless environments, offering stable connections without the need for complicated setups. While it may not provide the same level of customization or security features as Pyproxy, it strikes a good balance between privacy and ease of use.

In conclusion, both Pyproxy and DuckDuckGo Proxy offer valuable features for users seeking privacy protection in wireless networks. The decision between the two largely depends on the user’s technical expertise and specific needs. Pyproxy provides a more flexible solution that can be customized to suit particular network conditions, but it requires more effort to configure and maintain. DuckDuckGo Proxy, on the other hand, provides a simple, user-friendly solution that is ideal for casual users who need quick, reliable privacy protection without the hassle of configuration. Ultimately, the best choice will depend on the user’s priorities, whether that’s control over settings or convenience and ease of use.
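
Readers who want to check connection success rate on their own wireless network can measure it directly. The following is a minimal Python sketch, not part of either product: the helper names `tcp_attempt` and `success_rate` are hypothetical, and it assumes that "success" simply means a TCP connection to the proxy endpoint opens within a timeout. It uses only the standard library.

```python
import socket
from typing import Callable


def tcp_attempt(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to (host, port) opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return False


def success_rate(attempt: Callable[[], bool], trials: int = 20) -> float:
    """Call `attempt` `trials` times and return the fraction of calls that returned True."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    successes = sum(1 for _ in range(trials) if attempt())
    return successes / trials
```

For example, `success_rate(lambda: tcp_attempt("127.0.0.1", 8080), trials=20)` would probe a proxy listening locally, where the address and port are placeholders for whatever endpoint is actually in use. Running the same measurement at different distances from the access point gives a rough picture of how signal strength affects each setup.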

Oct 13, 2025