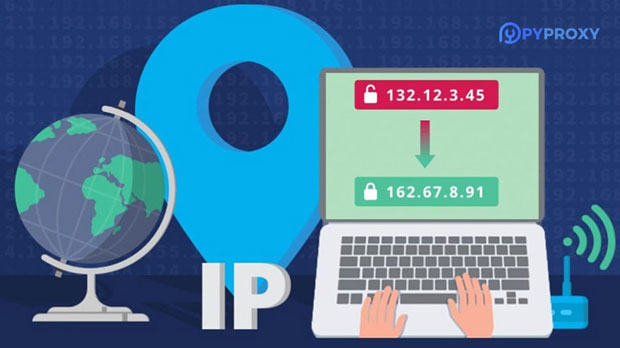

When it comes to residential proxies, many users find themselves choosing between free and cheap options. Both let you mask your IP address and browse more anonymously, but they differ in important ways. Free residential proxies typically come with limited features, higher risks, and questionable reliability, whereas cheap residential proxies offer more robust functionality, stronger security, and better support. Understanding these differences matters for businesses and individuals who rely on proxies for tasks like web scraping, online marketing, or securing their browsing. In this article, we take a closer look at both types of residential proxies, covering their advantages, disadvantages, and use cases.

## 1. What Are Residential Proxies?

Residential proxies route your traffic through IP addresses that internet service providers assign to real households, using devices such as home routers, laptops, or mobile phones. As a result, requests appear to come from an ordinary user rather than from a data center. They are often preferred over datacenter proxies because they offer greater anonymity and are less likely to be detected or blocked by websites. Common uses include web scraping, accessing geo-restricted content, and protecting online privacy.

## 2. Free Residential Proxies

Free residential proxies, as the name suggests, cost nothing to use. However, they come with several limitations that can significantly affect their reliability and usability. Their key characteristics are described below.

### 2.1 Limited Availability

Free residential proxies often draw on a small pool of IP addresses shared by many users, so the proxy servers can become overcrowded.
As a result, users may experience slow connection speeds and frequent disconnections.

### 2.2 Security Risks

Because free residential proxies are often offered by unknown providers or through public networks, they pose significant security risks. Users may unknowingly expose their data to malicious actors. These proxies may also be used by others for illegal activities, and users' information could be sold or otherwise exploited.

### 2.3 Unstable Performance

The performance of free residential proxies is typically unreliable. You may face frequent downtime, slow response times, and problems with IP rotation, which can disrupt tasks such as web scraping or accessing content that requires a consistent, uninterrupted connection.

### 2.4 Limited Support

Support for free residential proxies is often non-existent or minimal. If you run into problems, there may be no one to help you resolve them, leaving you stuck with an unreliable proxy.

## 3. Cheap Residential Proxies

Unlike free proxies, cheap residential proxies are paid services that offer a more reliable and secure experience. Although they are budget-friendly, they provide several benefits that make them a far better choice than free alternatives. Their main advantages are described below.

### 3.1 Better Reliability

Cheap residential proxies typically have a larger pool of IP addresses and better infrastructure, which makes for a more stable experience. You can expect faster connection speeds and less downtime, even during periods of high demand.

### 3.2 Enhanced Security

Security is one of the biggest advantages of cheap residential proxies. Their IP addresses are less likely to be associated with malicious activity, which helps protect your online privacy.
Since you are paying for the service, providers have a stronger incentive to maintain a secure environment, reducing the risk that your data is compromised.

### 3.3 Better Customer Support

Cheap residential proxy providers typically offer customer support to help with troubleshooting, setup, and other issues. This is a major advantage over free proxies, whose users often receive no support at all.

### 3.4 Advanced Features

Cheap residential proxies usually include advanced features such as faster IP rotation, customizable proxy settings, and geo-targeting. These features are particularly useful for web scraping, market research, and accessing restricted content.

## 4. Comparison: Free vs. Cheap Residential Proxies

Now that we have covered the key characteristics of free and cheap residential proxies, let's compare them side by side to see which option best suits your needs.

### 4.1 Cost

The most obvious difference is cost. Free proxies cost nothing, but they come with the limitations and risks described above. Cheap residential proxies offer a more reliable and secure experience for a reasonable price, which is usually a small investment compared to the value they provide.

### 4.2 Reliability and Performance

On performance, cheap residential proxies are far superior: they offer faster connections, less downtime, and more stable IP addresses. Free proxies, by contrast, tend to be slow and unreliable, with frequent service interruptions.

### 4.3 Security

Cheap residential proxies are typically more secure because they come from established companies that take extra measures to protect their users.
Free residential proxies, however, often carry significant security risks, as their providers may lack the resources or the incentive to secure your data.

### 4.4 Use Cases

Free residential proxies may work for light, non-critical tasks, such as browsing anonymously or testing certain websites. For more serious work like web scraping, running online ads, or conducting market research, cheap residential proxies are a far better choice because of their reliability and performance.

## 5. When to Choose Free Residential Proxies

Despite their drawbacks, free residential proxies can suit certain use cases. If you only need to perform a one-off task that does not require high performance or security, a free proxy may suffice. Free proxies can also be useful for testing basic functionality before committing to a paid service.

## 6. When to Choose Cheap Residential Proxies

If you are running a business or need proxies for critical, ongoing tasks such as web scraping, social media automation, or geo-targeted marketing, cheap residential proxies are the clear choice. They offer much higher reliability, security, and support, so your tasks can run efficiently without interruption.

In conclusion, while both free and cheap residential proxies let you mask your IP address and protect your online identity, they serve different needs. Free residential proxies come with many limitations, including slow performance, security risks, and a lack of support, which make them unsuitable for most business and professional purposes. Cheap residential proxies provide a much more reliable and secure solution, making them an excellent choice for users who need strong performance and security for web scraping, online marketing, and privacy protection.
Ultimately, the right choice depends on your specific needs and budget, but for serious, long-term use, cheap residential proxies are the better option.
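Section 5 notes that free proxies can be useful for testing basic functionality before committing to a paid service. One way to put the reliability and performance claims in this article to the test for a particular proxy is to send a handful of requests through it and record the success rate and median latency. The following is a minimal sketch in Python using only the standard library's `urllib.request`. The helper names `make_fetcher`, `evaluate_proxy` and `ProxyReport` are hypothetical, and the `http://user:pass@host:port` proxy URL format is an assumption. None of this is any provider's API, so check your provider's documentation for its actual endpoint and authentication scheme, and only request pages you are permitted to access.

```python
import http.client
import statistics
import time
import urllib.request
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ProxyReport:
    attempts: int
    successes: int
    median_latency_s: Optional[float]  # None if every request failed

    @property
    def success_rate(self) -> float:
        return self.successes / self.attempts if self.attempts else 0.0


def make_fetcher(proxy_url: str, timeout_s: float = 10.0) -> Callable[[str], None]:
    """Return a function that downloads a URL through the given proxy.

    proxy_url is a hypothetical placeholder such as
    "http://user:pass@proxy.example.com:8000"; the real host, port and
    credential format depend on your provider.
    """
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )

    def fetch(url: str) -> None:
        # Raises on connection failures, timeouts and HTTP error statuses.
        with opener.open(url, timeout=timeout_s) as response:
            response.read()

    return fetch


def evaluate_proxy(fetch: Callable[[str], None], url: str, attempts: int = 10) -> ProxyReport:
    """Send `attempts` sequential requests and summarise how the proxy behaved."""
    latencies: List[float] = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            fetch(url)
        except (OSError, http.client.HTTPException):
            # urllib's URLError/HTTPError and socket timeouts are OSError subclasses;
            # a failed attempt counts against the success rate but adds no latency.
            continue
        latencies.append(time.perf_counter() - start)
    median = statistics.median(latencies) if latencies else None
    return ProxyReport(attempts=attempts, successes=len(latencies), median_latency_s=median)
```

A comparison might call `evaluate_proxy(make_fetcher(free_proxy_url), test_url)` and do the same for a paid proxy, then compare `success_rate` and `median_latency_s`, where `free_proxy_url` and `test_url` are placeholders you supply. Requests are sent one at a time to avoid flooding the target site. A few requests give only a rough signal, since results vary with time of day, the target site, and which IP the provider assigns, so repeating the check is worthwhile before drawing conclusions.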

Oct 17, 2025