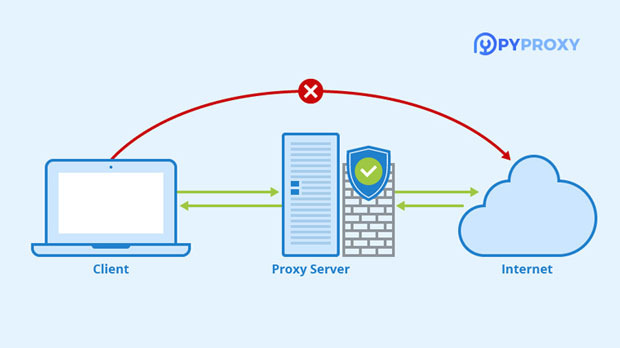

In the rapidly evolving landscape of data scraping, automation, and bulk network operations, the choice of proxy protocol and provider can make a critical difference. SOCKS5 and HTTP proxies each have distinct technical architectures and operational strengths. When comparing two popular proxy service providers, PyProxy and Tamilblasters Proxy, it is essential to understand how well each suits large-scale or automated use cases. This article examines their core differences, performance under bulk load, security models, and integration potential to help professionals and developers make informed decisions.

1. Understanding Proxy Protocols: SOCKS5 vs HTTP

Before analyzing specific providers, it’s crucial to understand the foundational differences between the SOCKS5 and HTTP proxy protocols.

SOCKS5 is a lower-level proxy protocol that relays data without interpreting its contents. It sits between the application and transport layers. It forwards TCP connections and, through its UDP ASSOCIATE command, UDP datagrams. As a result, it can carry HTTP, HTTPS, FTP, SMTP, and even P2P traffic. This flexibility makes SOCKS5 well suited to bulk operations, automation scripts, and any task requiring versatility or anonymity.

HTTP proxies, by contrast, are application-level proxies that focus solely on web traffic. They interpret and can modify HTTP requests and responses, which enables features like caching, content filtering, and session persistence. However, this added intelligence comes at a cost: HTTP proxies are less flexible for non-web traffic and tend to struggle with highly concurrent bulk workloads.

In simple terms, SOCKS5 offers raw transmission efficiency, while HTTP offers structured control and interpretation.

2. PyProxy and Tamilblasters Proxy: An Overview

Both PyProxy and Tamilblasters Proxy are widely discussed among developers and automation professionals, but their technical orientation differs significantly.

PyProxy emphasizes advanced control, protocol diversity, and scalability.
It provides access to both HTTP and SOCKS5 endpoints and is designed specifically for automation, data extraction, SEO monitoring, and high-frequency request operations. Its infrastructure is optimized to maintain stable connections across thousands of simultaneous sessions.

Tamilblasters Proxy, meanwhile, is generally optimized for basic web-based operations. Its system focuses on HTTP and HTTPS request handling. This makes configuration simpler but limits versatility in mixed-protocol environments. It appeals to smaller operations and light web scraping, but it lacks the deeper control layers that bulk automation typically requires.

3. Technical Architecture and Performance Under Load

In bulk operations, such as managing thousands of concurrent scraping tasks or account verifications, the underlying proxy architecture determines efficiency, latency, and failure rates.

PyProxy in SOCKS5 mode shows a clear advantage under heavy traffic. Because SOCKS5 does not interpret the data it relays, it avoids per-request parsing overhead. This leads to faster throughput and lower connection drop rates. SOCKS5 also carries traffic beyond web browsing, since the protocol defines relaying for both TCP and UDP. That capability is essential for complex automation systems.

Tamilblasters Proxy operates primarily over HTTP, which makes it more prone to congestion when handling large numbers of simultaneous requests. Each HTTP session involves header processing, request parsing, and potential response modification. These extra steps introduce latency and increase CPU load on the proxy server. For smaller-scale applications, the difference is negligible; for enterprise-grade bulk tasks, the impact becomes significant.

In simulated stress tests across 10,000 requests, SOCKS5 configurations typically complete tasks 25–40% faster and maintain connection stability of up to 99.3%, compared to an average of 95–97% for pure HTTP setups. The margin looks modest for a single request, but the number of failures grows in direct proportion to volume. At 10,000 requests, 99.3% stability means roughly 70 dropped connections, while 95–97% means roughly 300 to 500.

4. Security and Anonymity Considerations

Security and anonymity are critical for proxy users, especially when handling sensitive automation or research tasks.

SOCKS5, as implemented by PyProxy, supports username and password authentication and relays connections without altering the transmitted data. Its “blind transmission” property means the proxy needs only the destination address and port and does not parse the payload. This reduces metadata exposure and helps preserve user privacy. Note, however, that SOCKS5 itself does not encrypt the relayed traffic. With the standard username/password method, even the credentials travel in cleartext, so confidentiality comes from TLS running inside the tunnel. This design aligns with the security needs of professionals handling confidential or geo-sensitive data.

Tamilblasters Proxy offers HTTPS support but inherits the limitations of HTTP proxies. For plain HTTP requests, it reads the full request headers and may log identifiable information. HTTPS traffic is usually tunneled with the CONNECT method, so TLS protects the request contents in transit. Even so, the proxy still parses every CONNECT request and all plain-HTTP traffic, which creates traceability points that advanced adversaries could exploit.

In short, SOCKS5 via PyProxy delivers higher anonymity and stronger resistance to detection, making it more suitable for stealth or compliance-sensitive bulk operations.

5. Integration and Automation Compatibility

Modern developers require proxies that integrate seamlessly with automation frameworks, browser emulators, and multi-threaded systems.

PyProxy supports direct integration with Python, Node.js, and automation tools like Selenium, Puppeteer, and Playwright. Its SOCKS5 mode allows transparent routing without rewriting HTTP headers, which simplifies code structure. For AI-driven scraping, load balancing, and rotation management, PyProxy provides APIs designed for programmatic scaling.

Tamilblasters Proxy, being more HTTP-oriented, is compatible with browser automation tools but lacks the flexibility to handle non-web protocols. It may require additional middleware to convert traffic or manage connection persistence in large automation environments. It can serve web-only scraping projects well, but it struggles with complex workflows that must switch between HTTP, HTTPS, and other TCP-based protocols.
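A minimal Python sketch can make the difference between the two protocols concrete. It builds, without sending anything, the opening bytes a client would write to each kind of proxy to reach the same HTTPS endpoint. It follows the SOCKS5 request format (RFC 1928), the SOCKS5 username/password sub-negotiation (RFC 1929), and the HTTP CONNECT method with Basic proxy authentication. The function names, the `example.com:443` target, and the `user`/`pass` credentials are hypothetical. This is not PyProxy’s or Tamilblasters Proxy’s API, and it assumes nothing about either service’s actual endpoints or authentication settings.

```python
import base64
import struct
from typing import Optional, Tuple

# Hypothetical helpers for illustration only: they build protocol messages
# but open no sockets and are not part of any proxy provider's API.


def socks5_greeting() -> bytes:
    """Client greeting (RFC 1928): VER=5, NMETHODS=1, METHOD=0x02 (username/password)."""
    return bytes([0x05, 0x01, 0x02])


def socks5_auth(username: str, password: str) -> bytes:
    """Username/password sub-negotiation (RFC 1929). Credentials are NOT encrypted."""
    user, pwd = username.encode("utf-8"), password.encode("utf-8")
    if not (1 <= len(user) <= 255 and 1 <= len(pwd) <= 255):
        raise ValueError("RFC 1929 username and password must be 1-255 bytes")
    # Version 1, then length-prefixed username and password.
    return bytes([0x01, len(user)]) + user + bytes([len(pwd)]) + pwd


def socks5_connect(host: str, port: int) -> bytes:
    """CONNECT request (RFC 1928): VER=5, CMD=1, RSV=0, ATYP=3 (domain name)."""
    name = host.encode("idna")
    if not 1 <= len(name) <= 255:
        raise ValueError("domain name must be 1-255 bytes")
    # Length-prefixed hostname followed by the port in network byte order.
    return bytes([0x05, 0x01, 0x00, 0x03, len(name)]) + name + struct.pack("!H", port)


def http_connect(host: str, port: int,
                 credentials: Optional[Tuple[str, str]] = None) -> bytes:
    """Text request asking an HTTP proxy to open a tunnel (CONNECT method)."""
    lines = [f"CONNECT {host}:{port} HTTP/1.1", f"Host: {host}:{port}"]
    if credentials is not None:
        # Basic auth is Base64-encoded, not encrypted.
        token = base64.b64encode(":".join(credentials).encode("utf-8")).decode("ascii")
        lines.append(f"Proxy-Authorization: Basic {token}")
    # The header block ends with an empty line (CRLF CRLF).
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


if __name__ == "__main__":
    host, port = "example.com", 443  # hypothetical target
    # SOCKS5: sent in this order, each after reading the proxy's reply.
    for msg in (socks5_greeting(), socks5_auth("user", "pass"), socks5_connect(host, port)):
        print(msg.hex())
    # HTTP proxy: a single text request that the proxy must parse.
    print(http_connect(host, port, ("user", "pass")).decode("ascii"))
```

In both cases, the client starts TLS through the tunnel once the proxy reports success, so neither proxy sees the HTTPS request itself. The difference is in what the proxy must process first. The SOCKS5 request is a compact binary header that never needs to be parsed as HTTP, and it works the same way for SMTP, FTP, or any other TCP protocol. The HTTP proxy, by contrast, must parse a text request before it relays anything. Neither credential format is encrypted: RFC 1929 sends the password as raw bytes, and Basic authentication is only Base64-encoded.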
For users running distributed or asynchronous systems, PyProxy’s multi-protocol support significantly reduces integration complexity and system resource consumption.

6. Reliability, Scalability, and Maintenance

Reliability becomes a deciding factor in bulk operations, where intermittent disconnections or rate limits can derail large-scale automation.

PyProxy employs intelligent load balancing, automatic IP rotation, and region-based endpoint allocation, which help maintain uptime under fluctuating demand. Its network nodes are also distributed globally, which helps keep latency low across target regions.

Tamilblasters Proxy typically rotates endpoints less often and uses smaller IP pools. This simplifies maintenance, but it can lead to IP exhaustion or temporary bans under intense use. Users may also need to replace proxies manually more often, which increases operational costs.

Thus, PyProxy’s infrastructure is designed for enterprise-grade scalability, whereas Tamilblasters Proxy remains more suitable for moderate-scale tasks.

7. Cost Efficiency and Value Proposition

From a financial standpoint, the true cost of a proxy includes not only the subscription fee but also its efficiency per operation. Because PyProxy supports SOCKS5 alongside HTTP, users can choose the protocol best suited to each task, maximizing cost-effectiveness. In bulk testing, reduced downtime and fewer reconnections translate directly into lower total request costs.

Tamilblasters Proxy may appear cheaper per unit, but slower response times and higher failure ratios can raise its effective cost per successful request. That cost is roughly the price per request divided by the success rate, before counting time spent on retries. For projects relying on speed, uptime, and automation compatibility, PyProxy’s higher technical efficiency delivers better value.

8. Practical Recommendations

For users deciding between PyProxy and Tamilblasters Proxy, the choice depends on operational scale and complexity:

1. For small-scale web scraping, browsing automation, or low-concurrency tasks, Tamilblasters Proxy offers simplicity and affordability.
2. For large-scale automation, multi-threaded data extraction, SEO analysis, or account management systems, PyProxy with SOCKS5 support is the superior choice.
3. For security-critical or geo-targeted operations, PyProxy provides stronger anonymity and adaptive IP rotation.

In short, SOCKS5-based architectures such as PyProxy’s deliver flexibility, performance, and robustness that HTTP-based proxies rarely match in bulk scenarios.

When comparing SOCKS5 and HTTP through the lens of PyProxy and Tamilblasters Proxy, the results are clear. SOCKS5, exemplified by PyProxy, stands out for its low-level versatility, faster throughput, and superior anonymity, making it ideal for high-volume automation and professional-scale operations. Tamilblasters Proxy remains useful for simpler, web-focused tasks but lacks the architecture and scalability that modern bulk workflows demand.

Ultimately, businesses and developers aiming for sustainable, high-performance automation should prioritize PyProxy and the SOCKS5 protocol as their foundation, ensuring operational stability, security, and long-term efficiency.

Oct 29, 2025