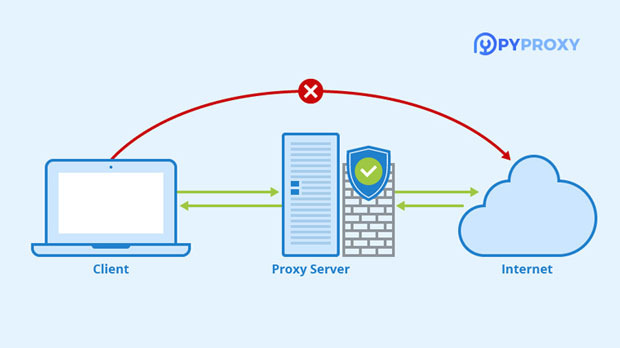

In today's digital age, enterprises increasingly rely on web proxies as a critical tool for network security and access control. A web proxy acts as an intermediary between users and the internet, offering benefits that include traffic filtering, user authentication, enforcement of encrypted connections, and protection from cyber threats. By deploying web proxies strategically, businesses can regulate and monitor internet usage, safeguard sensitive data, and prevent unauthorized access to their networks. This article explains why web proxies matter for network security and access control, and how organizations can implement and optimize them to protect their digital infrastructure.

Understanding Web Proxy: The Basics

A web proxy is a server that sits between a user's device and the internet, intercepting requests from the user to web servers and handling them on the user's behalf. It can be configured to filter content, log traffic, and enforce security policies. In essence, a web proxy is a protective shield that manages traffic flow so that only permitted content reaches the user's device. By filtering out malicious traffic and unauthorized websites, a proxy reduces exposure to threats such as malware, phishing attacks, and data breaches.

One of the key functions of a web proxy is to control the type of content users can access. By filtering websites by category or by specific rules, businesses can keep employees away from harmful or distracting sites while enforcing compliance with organizational policies.

The Role of Web Proxy in Enhancing Network Access Control

1. Restricting Access Based on User Identity

Web proxies can be integrated with authentication systems to verify a user's identity before granting access to specific resources. This allows businesses to apply granular access controls based on the roles or responsibilities of individual employees.
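
A minimal sketch of an identity-aware rule check in Python is shown below. It assumes the proxy has already authenticated the user (for example through a directory service) and knows their department and clearance level. The `User` type, the `POLICY` table, the hostnames, and the default-allow fallback are all hypothetical choices for illustration, not any particular proxy product's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    """An already-authenticated user, as the proxy might see them (hypothetical fields)."""
    name: str
    department: str
    clearance: int


# Hypothetical policy table: hostname -> (allowed departments, minimum clearance level).
POLICY: dict[str, tuple[frozenset[str], int]] = {
    "hr.intranet.example": (frozenset({"HR"}), 2),
    "finance.intranet.example": (frozenset({"Finance", "Executive"}), 3),
}


def is_allowed(user: User, host: str) -> bool:
    """Return True if the proxy should forward this user's request to `host`."""
    rule = POLICY.get(host.lower())
    if rule is None:
        # No identity rule for this host: fall back to the default policy (allow, in this sketch).
        return True
    departments, min_clearance = rule
    # The user must belong to an allowed department AND meet the clearance threshold.
    return user.department in departments and user.clearance >= min_clearance


if __name__ == "__main__":
    alice = User("alice", "HR", 2)
    bob = User("bob", "Engineering", 3)
    print(is_allowed(alice, "hr.intranet.example"))       # True
    print(is_allowed(bob, "hr.intranet.example"))         # False: wrong department
    print(is_allowed(alice, "finance.intranet.example"))  # False: wrong department, clearance too low
    print(is_allowed(bob, "news.example.com"))            # True: no rule, default allow
```

Requiring both conditions is a design choice of this sketch; a rule could equally key on department alone or clearance alone. Returning `False` for hosts without a rule would give the stricter default-deny behavior.
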
For instance, some employees may need access to sensitive company data, while others should be limited to non-critical information. With a web proxy, businesses can define policies that restrict internet access based on user identity. For example, a proxy can be configured to allow certain websites only for users in a specific department or with a particular clearance level. This keeps sensitive information accessible only to authorized personnel and reduces the risk of data leaks or breaches.

2. Implementing IP and Location-Based Restrictions

Web proxies can also enforce access controls based on the user's IP address or geographic location. By examining the source IP address of a request, the proxy can identify where it originated (a specific office subnet, or an approximate region via IP geolocation) and apply location-specific rules. For example, access to certain web resources can be limited to users within a specific office or region, while users outside those boundaries are denied.

This is especially useful for businesses with multiple locations or a distributed workforce. IP and location-based restrictions reduce the risk of unauthorized access from external sources or from malicious actors operating in high-risk regions.

3. Granular Traffic Filtering and Content Control

A primary function of web proxies is filtering internet traffic at a granular level. Proxies can block access to specific websites, content types, or even individual URLs based on predefined rules. This helps prevent access to harmful or inappropriate content, such as adult websites, gambling sites, or sites known to distribute malware.

Proxies can also filter content by category, such as social media, news, or entertainment, allowing businesses to enforce acceptable use policies.
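
The IP-based and content-filtering rules described above can be combined into a single per-request decision. The Python sketch below checks the client's source address against office subnets, looks up a site category, and matches blocked URL prefixes. The subnets, domain names, category table, and rule order are hypothetical; production proxies typically draw categories from a vendor-maintained database and use a GeoIP database for region-level rules, neither of which is shown here.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical office subnets; only these may reach internal resources.
OFFICE_NETWORKS = [
    ipaddress.ip_network("10.10.0.0/16"),  # e.g. head office
    ipaddress.ip_network("10.20.0.0/16"),  # e.g. branch office
]
INTERNAL_DOMAIN = "intranet.example"  # hypothetical internal domain

# Hypothetical category lookup standing in for a real categorization database.
HOST_CATEGORIES = {
    "casino.example": "gambling",
    "social.example": "social_media",
    "news.example": "news",
}
BLOCKED_CATEGORIES = {"gambling", "adult", "malware"}
BLOCKED_URL_PREFIXES = ("http://files.example/unsafe/",)


def from_office(client_ip: str) -> bool:
    """True if the client address falls inside one of the office subnets."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in OFFICE_NETWORKS)


def decide(client_ip: str, url: str) -> str:
    """Return 'allow' or a 'deny: <reason>' string for one request."""
    host = urlsplit(url).hostname or ""  # urlsplit lowercases the hostname
    # 1. Location rule: internal resources are reachable only from office subnets.
    is_internal = host == INTERNAL_DOMAIN or host.endswith("." + INTERNAL_DOMAIN)
    if is_internal and not from_office(client_ip):
        return "deny: outside office network"
    # 2. Category rule: block whole classes of sites.
    category = HOST_CATEGORIES.get(host)
    if category in BLOCKED_CATEGORIES:
        return f"deny: category {category}"
    # 3. URL rule: block specific paths even on otherwise permitted hosts.
    if url.startswith(BLOCKED_URL_PREFIXES):
        return "deny: blocked URL"
    return "allow"


if __name__ == "__main__":
    print(decide("10.10.3.4", "https://wiki.intranet.example/home"))    # allow
    print(decide("203.0.113.7", "https://wiki.intranet.example/home"))  # deny: outside office network
    print(decide("10.10.3.4", "https://casino.example/"))                # deny: category gambling
    print(decide("10.10.3.4", "http://files.example/unsafe/setup.exe"))  # deny: blocked URL
```

Rule order matters: the location check runs first, so an internal URL requested from outside the office is denied regardless of its category. `social.example` is categorized but not blocked, showing that categorizing a site and blocking it are separate decisions.
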
By controlling what employees can and cannot access online, businesses can reduce productivity loss, limit distractions, and guard against security threats.

The Role of Web Proxy in Enhancing Network Security

1. Protection Against Malware and Cyber Attacks

Web proxies act as a first line of defense against malware, ransomware, and other cyber threats. By scanning incoming traffic for known threats, proxies can block malicious downloads or suspicious websites before they reach the user's device. For HTTPS traffic, scanning content requires the proxy to perform TLS inspection; without it, the proxy can act only on the destination hostname. This is crucial for stopping malware from spreading within the network and for reducing the risk of a data breach.

Moreover, web proxies can enforce the use of secure protocols such as HTTPS, for example by blocking plain-HTTP requests to external sites, so that traffic between the network and web servers is encrypted. Encryption prevents third parties from intercepting sensitive data, such as login credentials or financial information, in transit.

2. Data Leak Prevention

Data leakage is a significant concern for businesses, especially those handling sensitive or confidential information. Web proxies can be configured to detect and block unauthorized transmission of data outside the corporate network. For example, a proxy may block attempts to upload files to external storage services, send them as webmail attachments, or post them to websites the organization has not authorized.

In addition to preventing data leakage, proxies can log all outgoing traffic that passes through them, showing who accessed what data and when. This helps businesses detect potential leaks early and respond appropriately.

3. Anonymous Browsing and IP Masking

In some cases, businesses may want to remain anonymous while browsing the internet or interacting with external servers. Web proxies can mask the user's real IP address: external servers see the proxy server's IP address instead.
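
The masking itself happens at the network layer: the proxy opens its own connection to the web server, so the server sees the proxy's address as the source. Many proxies, however, add headers such as `X-Forwarded-For`, `Forwarded`, or `Via` that can reveal the client's address, so an anonymizing configuration leaves them out. The Python sketch below shows that header-filtering step in isolation; the function name and the exact header list are assumptions for illustration, not the behavior of a specific proxy product.

```python
# Headers that can disclose the client's internal address or the proxy chain.
IDENTIFYING_HEADERS = frozenset({"x-forwarded-for", "forwarded", "via", "x-real-ip"})
# Headers addressed to the proxy itself, which should not be passed upstream.
PROXY_ONLY_HEADERS = frozenset({"proxy-authorization", "proxy-connection"})


def build_upstream_headers(client_headers: dict[str, str]) -> dict[str, str]:
    """Copy client request headers for the upstream request, dropping identifying ones.

    Header names are compared case-insensitively, as HTTP requires.
    """
    dropped = IDENTIFYING_HEADERS | PROXY_ONLY_HEADERS
    return {
        name: value
        for name, value in client_headers.items()
        if name.lower() not in dropped
    }


if __name__ == "__main__":
    incoming = {
        "Host": "example.com",
        "User-Agent": "demo-client/1.0",
        "X-Forwarded-For": "10.10.3.4",               # hypothetical value added by an internal hop
        "Proxy-Authorization": "Basic dXNlcjpwYXNz",  # credentials meant for the proxy
    }
    print(build_upstream_headers(incoming))
    # {'Host': 'example.com', 'User-Agent': 'demo-client/1.0'}
```

A real proxy would also remove hop-by-hop headers such as `Connection` (and any headers it lists) and would forward the request body unchanged; only header selection is shown here.
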
Masking the IP address helps protect the user's identity and keeps the company's internal network addressing hidden from external entities.

Web proxies can also be used to bypass geo-restrictions or censorship, providing access to global content and services that would otherwise be blocked. This is particularly valuable for businesses with international operations or for employees who need content from restricted regions.

4. Centralized Monitoring and Logging

A significant advantage of a web proxy is centralized monitoring and logging of network activity. Proxies can record detailed logs of web requests, including the URL accessed, the time of access, the requesting user, and the type of content requested. These logs can then be analyzed to detect unusual behavior, identify potential security threats, and verify compliance with internal policies.

With centralized monitoring, businesses can also generate internet-usage reports, which help optimize bandwidth, track employee productivity, and highlight areas for security improvement.

Best Practices for Implementing Web Proxy Solutions

To get the most from web proxies, businesses should follow these best practices:

1. Regularly Update Proxy Filters and Rules: New malware sites and phishing campaigns appear constantly, so proxy filters and access control rules must be updated regularly to keep pace with emerging threats.
2. Integrate with Other Security Systems: Web proxies should work alongside firewalls, antivirus software, and intrusion detection systems to provide a layered defense against cyber threats.
3. User Awareness and Training: Employees should be trained on why the web proxy is in place and how to follow internet usage policies.
   Awareness of cyber risks can significantly reduce the chance of a security breach.
4. Monitor and Review Logs Regularly: Logs should be reviewed periodically for security anomalies or policy violations. Automated tools can flag suspicious activity, while manual checks add another layer of scrutiny.

Web proxies are indispensable tools for enterprises seeking to strengthen network access control and security. With robust proxy solutions, businesses can control internet usage, protect against cyber threats, and keep sensitive information secure. Proxies offer a flexible, scalable way to manage internet traffic and address specific security and access control challenges. Deployed correctly, web proxies are a powerful defense that reduces the risk of cyberattacks, data leaks, and unauthorized access, while also supporting productivity and compliance in the workplace.

Nov 06, 2025